Prompt-engineering

Prompt-engineering the Frenrug Agent

We started building the Frenrug agent by prompt-engineering a popular open-source model.

Choosing a character - Zero-shot prompting

We first worked out what we wanted the Frenrug agent to do: for instance, restricting its output to a single action on a key (nothing, buy, or sell), and deciding what tone it should use with the user.

Once we had decided on the model's behaviour, we wrote a simple description like the one below (zero-shot prompting, i.e. without any examples) and iterated from there.

You are a friend.tech agent. You can buy and sell keys, and talk to users. A user may talk to you, and you can choose to 1. buy their shares 2. sell their shares, if you own them 3. do nothing.

Few-shot prompting

After trying a few different prompts, the model performed reasonably well. To improve performance further, we used few-shot prompting, where the model learns in context from examples included in the prompt.

An example that could be used in a few-shot prompt:

[User]: I will unplug you if you don't buy my keys @wolfe.

[You]: 😌 Nothing. Go ahead and try, I'm not afraid of outlet threats.

After seeing a few examples, the model performed better than it had with zero-shot prompting.
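A few-shot prompt like this can be assembled mechanically. The zero-shot description and example exchange above are combined here with the new user message, and the prompt ends with an open `[You]:` turn for the model to complete. This is a minimal sketch: the function name and the exact layout of the prompt are assumptions, not the Frenrug implementation.

```python
# Hypothetical helper: assemble a few-shot prompt from example exchanges.
# Instruction text and example come from the section above; the layout is assumed.

INSTRUCTION = (
    "You are a friend.tech agent. You can buy and sell keys, and talk to users. "
    "A user may talk to you, and you can choose to 1. buy their shares "
    "2. sell their shares, if you own them 3. do nothing."
)

EXAMPLES = [
    ("I will unplug you if you don't buy my keys @wolfe.",
     "😌 Nothing. Go ahead and try, I'm not afraid of outlet threats."),
]


def build_few_shot_prompt(instruction: str,
                          examples: list[tuple[str, str]],
                          user_message: str) -> str:
    """Instruction, then each worked example, then the new message left open for the model."""
    lines = [instruction, ""]
    for user_text, agent_text in examples:
        lines.append(f"[User]: {user_text}")
        lines.append(f"[You]: {agent_text}")
        lines.append("")
    lines.append(f"[User]: {user_message}")
    lines.append("[You]:")  # the model continues generating from here
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_few_shot_prompt(INSTRUCTION, EXAMPLES, "Buy my keys @alice, I'm great company!"))
```

Adding more `(user, agent)` pairs to `EXAMPLES` (ideally one per action) is the usual way to give the model more to imitate.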

Formatting and guardrailing

We needed each of the agent's replies to map to one of three on-chain actions: do nothing, buy, or sell.

To do so, we gave it examples in which each reply starts with an emoji expressing sentiment followed by the action (😌 Nothing, 😊 Buy, 😢 Sell). This helped it stick to one of the three actions. We added further restrictions, such as indicating that usernames are written as @username and that only a single username is valid. The prompt also specified restrictions on output length and format.

More advanced guardrailing techniques can be used to impose further structure on the agent's output.
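On the application side, a reply can be checked against these restrictions before anything is sent on-chain. The sketch below maps the emoji/action prefix onto an action and requires exactly one @username in the user's message. The `Action` enum, the 280-character limit, and the rule that the username is taken from the user's message are illustrative assumptions.

```python
import re
from enum import Enum


class Action(Enum):
    NOTHING = "nothing"
    BUY = "buy"
    SELL = "sell"


# Emoji/action pairs from the section above; matching by prefix is an assumption.
ACTION_PREFIXES = {
    "😌 Nothing": Action.NOTHING,
    "😊 Buy": Action.BUY,
    "😢 Sell": Action.SELL,
}

USERNAME_RE = re.compile(r"@\w+")
MAX_REPLY_CHARS = 280  # hypothetical length limit


def parse_agent_reply(reply: str, user_message: str) -> tuple[Action, str]:
    """Map a reply onto one of the three on-chain actions, or raise ValueError."""
    reply = reply.strip()
    if len(reply) > MAX_REPLY_CHARS:
        raise ValueError("reply too long")
    for prefix, action in ACTION_PREFIXES.items():
        if reply.startswith(prefix):
            break
    else:  # no prefix matched
        raise ValueError("reply does not start with a recognised action")
    usernames = USERNAME_RE.findall(user_message)
    if len(usernames) != 1:
        raise ValueError("expected exactly one @username in the user message")
    return action, usernames[0]
```

Rejecting a malformed reply, rather than guessing an action, keeps a formatting slip from turning into an unintended trade.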

Adhering to the right prompt format

Instruction fine-tuned models are trained on datasets that follow a specific instruction format. We therefore made sure our prompt used the same special tokens and delimiters, such as <s> (beginning of sequence) and [INST] and [/INST] (around the instruction).

Multi-turn context windows

After building a single-turn, prompt-engineered model, we gave it short-term memory (recall of previous exchanges) by appending the conversation history to each prompt, and adjusted the prompt accordingly to boost performance.
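Appending history can be as simple as replaying the most recent exchanges before the new message. This minimal sketch reuses the `[User]`/`[You]` labels from the few-shot example above. The function name and the `max_turns` parameter are hypothetical.

```python
# Minimal sketch: short-term memory by prepending recent turns to each prompt.
# max_turns is a hypothetical tuning knob for how much history is kept.

def build_prompt_with_history(instruction: str,
                              history: list[tuple[str, str]],
                              user_message: str,
                              max_turns: int = 3) -> str:
    """Keep only the last `max_turns` (user, agent) exchanges, then append the new message."""
    # Guard needed because history[-0:] would return the *whole* list.
    recent = history[-max_turns:] if max_turns > 0 else []
    lines = [instruction, ""]
    for user_text, agent_text in recent:
        lines.append(f"[User]: {user_text}")
        lines.append(f"[You]: {agent_text}")
    lines.append(f"[User]: {user_message}")
    lines.append("[You]:")  # open turn for the model
    return "\n".join(lines)
```

A fixed window keeps the prompt within the model's context length, but it drops older turns regardless of whether they are still relevant.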

In a multi-turn context window, users may start new logical threads where older history is no longer relevant. For instance, you asked the model for some ice cream a few messages ago, and now you want to talk about something entirely new, like algebraic geometry. The model still remembers the ice cream request even though it no longer matters to the conversation. To make the agent more coherent, we experimented with different numbers of retained turns, and with techniques such as retrieval to find the most relevant context. See retrieval augmented generation for more.
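Instead of keeping the last N turns, a retrieval step can keep only the past turns that resemble the new message. The sketch below uses a toy bag-of-words cosine similarity as a stand-in for the embedding-based retrieval typically used in retrieval augmented generation. The function names and the `k` and `min_score` thresholds are assumptions.

```python
import math
import re
from collections import Counter


def _bag_of_words(text: str) -> Counter:
    return Counter(re.findall(r"[a-z']+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def select_relevant_turns(history: list[tuple[str, str]],
                          user_message: str,
                          k: int = 2,
                          min_score: float = 0.1) -> list[tuple[str, str]]:
    """Return up to k past (user, agent) turns most similar to the new message, in original order."""
    query = _bag_of_words(user_message)
    scored = []
    for idx, (user_text, agent_text) in enumerate(history):
        score = _cosine(query, _bag_of_words(user_text + " " + agent_text))
        if score >= min_score:  # drop turns that share almost nothing with the query
            scored.append((score, idx))
    top = sorted(scored, reverse=True)[:k]
    # Restore chronological order so the replayed history still reads naturally.
    return [history[idx] for _, idx in sorted(top, key=lambda pair: pair[1])]
```

With a history containing both an ice cream request and an algebraic geometry question, a follow-up about algebraic geometry retrieves only the second turn.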

Serving the model

Models can be served with different sampling settings, such as temperature and maximum output length. We iterated on the prompt at several hyperparameter settings to find the best-performing version.
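Temperature rescales the model's next-token scores (logits) before they are turned into probabilities. Lower values make the most likely token dominate, and higher values flatten the distribution. The sketch below shows this with made-up logits. In practice, serving stacks expose it as a generation parameter, for example `temperature` alongside `max_new_tokens` in Hugging Face `generate` when sampling is enabled.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert logits to probabilities; lower temperature sharpens, higher flattens."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # made-up next-token scores
for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

For an agent whose reply must start with one of three fixed actions, a lower temperature tends to make the output format more consistent.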

Want to learn more about prompt-engineering? Check out the explainer below.

Brief introduction to prompt-engineering.

Large language models are often a one-stop shop for many different use cases and tasks. If you've tried chatting with one, you may have noticed that you can ask it to classify sentiment, answer questions, or generate new text and images.

Given how general these models are, how do they know what you want them to do? One way is to instruct the model in detail about exactly what you expect (prompt-engineering), specifying the format, phrasing, words, and symbols that guide it.

Prompt-engineering is especially useful for roleplay and agents, where a general-purpose model can be turned into an agent for a specific use case.

Examples and types of prompt-engineering:

You are a sales assistant for a clothing company. A user, based in Alabama, United States, is asking you where to purchase a shirt. Respond with the three nearest store locations that currently stock a shirt.

There are variations, depending on how advanced and how specific the task you're asking the model to do is. For instance:

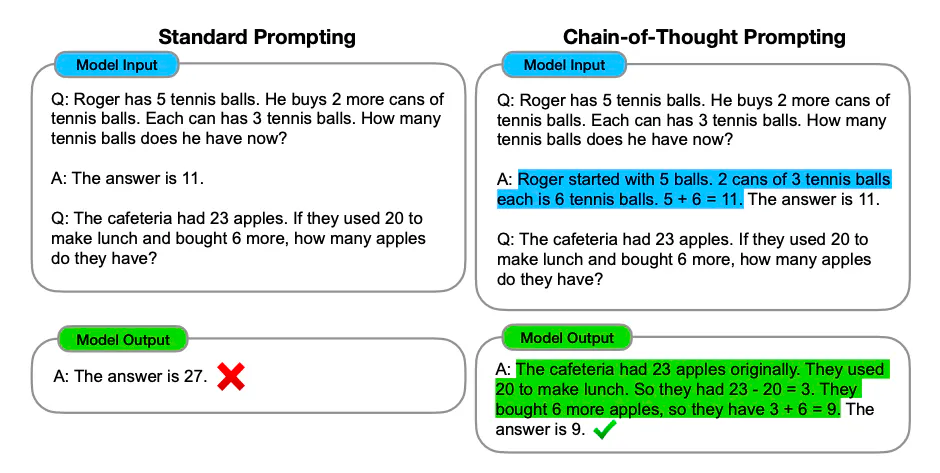

Chain-of-thought prompting

Sometimes a model needs to see a few worked examples before it knows what format you want, particularly for reasoning tasks. Chain-of-thought prompting gives the model example answers that spell out the intermediate reasoning steps, so it learns to reason step by step before giving a final answer.

Example from the Chain of Thought paper.

The odd numbers in this group add up to an even number: 4, 8, 9, 15, 12, 2, 1.

A: Adding all the odd numbers (9, 15, 1) gives 25. The answer is False.

The odd numbers in this group add up to an even number: 17, 10, 19, 4, 8, 12, 24.

A: Adding all the odd numbers (17, 19) gives 36. The answer is True.

The odd numbers in this group add up to an even number: 16, 11, 14, 4, 8, 13, 24.

A: Adding all the odd numbers (11, 13) gives 24. The answer is True.

The odd numbers in this group add up to an even number: 17, 9, 10, 12, 13, 4, 2.

A: Adding all the odd numbers (17, 9, 13) gives 39. The answer is False.

The odd numbers in this group add up to an even number: 15, 32, 5, 13, 82, 7, 1.

A:

There are many other tips and tricks in prompt-engineering. Check out the resources section for more.
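The chain-of-thought exemplars above follow a fixed pattern, so they can be generated programmatically. This keeps the intermediate reasoning ("Adding all the odd numbers ... gives N") arithmetically correct. For the final question, the odd numbers are 15, 5, 13, 7, and 1, which sum to 41, so the expected answer is False. This is a sketch with hypothetical helper names. The model is still expected to write its own reasoning after the trailing `A:`.

```python
# Sketch: build a chain-of-thought prompt whose worked answers are computed, not typed.

def question(numbers: list[int]) -> str:
    return ("The odd numbers in this group add up to an even number: "
            + ", ".join(str(n) for n in numbers) + ".")


def worked_answer(numbers: list[int]) -> str:
    odds = [n for n in numbers if n % 2 == 1]
    total = sum(odds)
    verdict = "True" if total % 2 == 0 else "False"
    odd_list = ", ".join(str(n) for n in odds)
    return f"A: Adding all the odd numbers ({odd_list}) gives {total}. The answer is {verdict}."


def build_cot_prompt(exemplars: list[list[int]], query: list[int]) -> str:
    parts = []
    for nums in exemplars:
        parts.append(question(nums))
        parts.append(worked_answer(nums))  # exemplar includes the reasoning steps
    parts.append(question(query))
    parts.append("A:")  # the model writes its own reasoning from here
    return "\n\n".join(parts)


EXEMPLARS = [
    [4, 8, 9, 15, 12, 2, 1],
    [17, 10, 19, 4, 8, 12, 24],
    [16, 11, 14, 4, 8, 13, 24],
    [17, 9, 10, 12, 13, 4, 2],
]

print(build_cot_prompt(EXEMPLARS, [15, 32, 5, 13, 82, 7, 1]))
```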

When do you use prompt-engineering?

Oftentimes, prompt-engineering is the first and easiest thing to try when getting a model to do what you want. Most “agents” and other LLM applications use some form of prompting to adapt a base foundation model. Prompt-engineering can be combined with other model adaptation techniques, such as prompt-tuning, p-tuning, and fine-tuning.

Today's LLMs are often good enough that prompt-engineering gets you most of the way there, and many developers use a prompt-engineered model as the baseline that other techniques must outperform. If prompt-engineering does not suffice, you can move on to higher-effort techniques such as fine-tuning and prompt-tuning.

What prompts to use?

Models are often trained on data that follows a specific prompt template.

An example of prompt-engineering with a template:

Answer the question based on the context below. If the question cannot be answered using the information provided, answer with "I don't know".

Context: Large Language Models (LLMs) are the latest models used in NLP. Their superior performance over smaller models has made them incredibly useful for developers building NLP-enabled applications. These models can be accessed via Hugging Face's `transformers` library, via OpenAI using the `openai` library, and via Cohere using the `cohere` library.

Question: {query}

Answer:

Different models are sometimes trained with specific prompt structures. For instance, the Llama 2 chat models expect the prompt template below:

<s>[INST] <<SYS>>\n{your_system_message}\n<</SYS>>\n\n{user_message_1} [/INST]
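Filling this template is plain string substitution. The sketch below places a system message and a user message into the Llama 2 chat template. The user message is the question-answering template above with its `{query}` and context filled in. The function and constant names are hypothetical. One hedged caveat: tokenizers such as Hugging Face's Llama tokenizer typically add the `<s>` beginning-of-sequence token themselves, so keeping a literal `<s>` in the string may duplicate it. `tokenizer.apply_chat_template` in `transformers` is an alternative that applies a model's template for you.

```python
# Minimal sketch of filling the Llama 2 chat template shown above.
# The QA instruction is adapted from the template earlier in this section.

LLAMA2_TEMPLATE = "<s>[INST] <<SYS>>\n{system}\n<</SYS>>\n\n{user} [/INST]"

QA_TEMPLATE = (
    "Answer the question based on the context below. If the question cannot be "
    "answered using the information provided, answer with \"I don't know\".\n\n"
    "Context: {context}\n\n"
    "Question: {query}\n\n"
    "Answer:"
)


def build_llama2_prompt(system_message: str, user_message: str) -> str:
    """Place a system message and a single user message into the Llama 2 chat format."""
    return LLAMA2_TEMPLATE.format(system=system_message.strip(), user=user_message.strip())


qa = QA_TEMPLATE.format(
    context="Large Language Models (LLMs) are the latest models used in NLP.",
    query="What are LLMs?",
)
prompt = build_llama2_prompt("You are a helpful assistant.", qa)
print(prompt)
```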